Abstract:

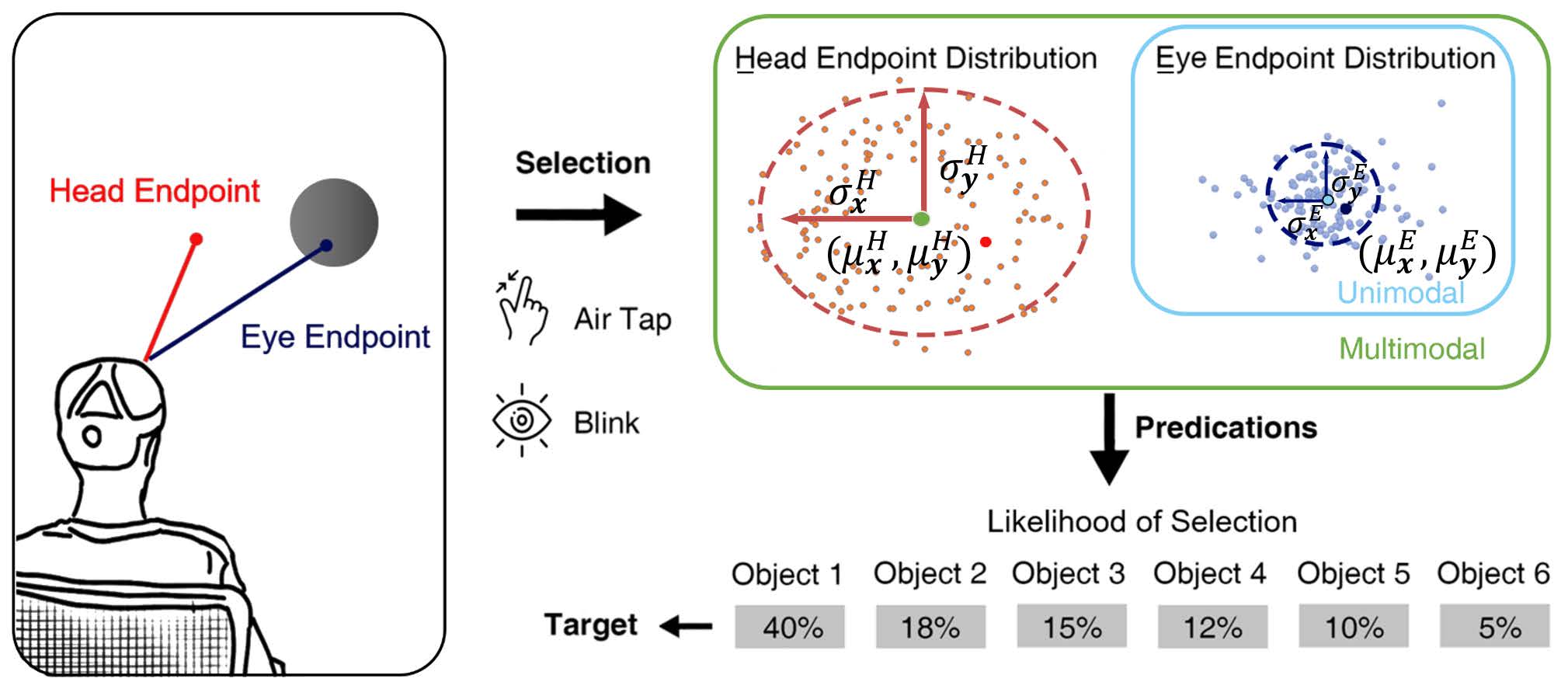

Target selection is a fundamental task in interactive Augmented Reality (AR) systems. Predicting the user's intended target in such systems can provide a smooth, low-friction interaction experience. Our work aims to predict gaze-based target selection in AR headsets using eye and head endpoint distributions, which describe the probability distribution of eye and head 3D orientation at the moment a user triggers a selection input. We first conducted a user study to collect users’ eye and head behavior in a gaze-based pointing selection task with two confirmation mechanisms (air tap and blinking). Based on the study results, we then built two models: a unimodal model using only eye endpoints and a multimodal model using both eye and head endpoints. Results from a second user study showed that pointing accuracy improved by approximately 32% after integrating our models into gaze-based selection techniques.
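To make the idea of endpoint-distribution-based prediction concrete, the following is a minimal sketch, not the models built in this work. It assumes, purely for illustration, that eye and head endpoints are each described by an isotropic 2D Gaussian over (yaw, pitch) angles in degrees centered on the candidate target, and that the predicted target is the one with the highest summed log-likelihood. The function names, the spread parameters `eye_sigma` and `head_sigma`, and the example values are all hypothetical.

```python
import math


def gaussian_log_likelihood(endpoint, center, sigma):
    """Log-density of an isotropic 2D Gaussian centered on `center`.

    `endpoint` and `center` are (yaw, pitch) pairs in degrees; `sigma` is an
    assumed standard deviation in degrees (illustrative, not a fitted value).
    """
    dx = endpoint[0] - center[0]
    dy = endpoint[1] - center[1]
    return -(dx * dx + dy * dy) / (2 * sigma * sigma) - math.log(2 * math.pi * sigma * sigma)


def predict_target(eye, targets, eye_sigma, head=None, head_sigma=None):
    """Return the index of the most likely target.

    With only `eye`, this is a unimodal prediction. When `head` and
    `head_sigma` are also given, the head log-likelihood is added, which
    assumes eye and head endpoints are independent given the target.
    """
    best_index, best_score = None, -math.inf
    for index, center in enumerate(targets):
        score = gaussian_log_likelihood(eye, center, eye_sigma)
        if head is not None and head_sigma is not None:
            score += gaussian_log_likelihood(head, center, head_sigma)
        if score > best_score:
            best_index, best_score = index, score
    return best_index


# Hypothetical layout: three targets at (yaw, pitch) positions in degrees.
targets = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0)]
eye_endpoint = (2.6, 0.0)   # gaze lands slightly closer to target 1
head_endpoint = (0.5, 0.0)  # head orientation stays near target 0

unimodal_choice = predict_target(eye_endpoint, targets, eye_sigma=1.5)
multimodal_choice = predict_target(
    eye_endpoint, targets, eye_sigma=1.5, head=head_endpoint, head_sigma=4.0
)
```

In this toy layout the eye endpoint alone favors the second target, while adding the head endpoint shifts the prediction to the first. The actual distributions would need to be fitted from collected endpoint data and need not be isotropic or centered on the target.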